This is a GDNative visualization tool/library that I made for sequenced music formats. Unfortunately, it's not quite done yet: the most obvious format, MIDI, isn't supported. The formats this library does support belong to a broad class of early digital composition formats called Music Trackers. These were very common back when audio compression on devices and computers was not yet a thing and hard disk capacities were still pretty low. If you played freeware, shareware or DOS games up until around the early 2000s (or went on a voyage across the sea, hehe), chances are that you have already heard music made, and played, in one of the various music tracker formats (whose files are called modules), such as ImpulseTracker (IT), Fasttracker (XM), Soundtracker (MOD) and many others.

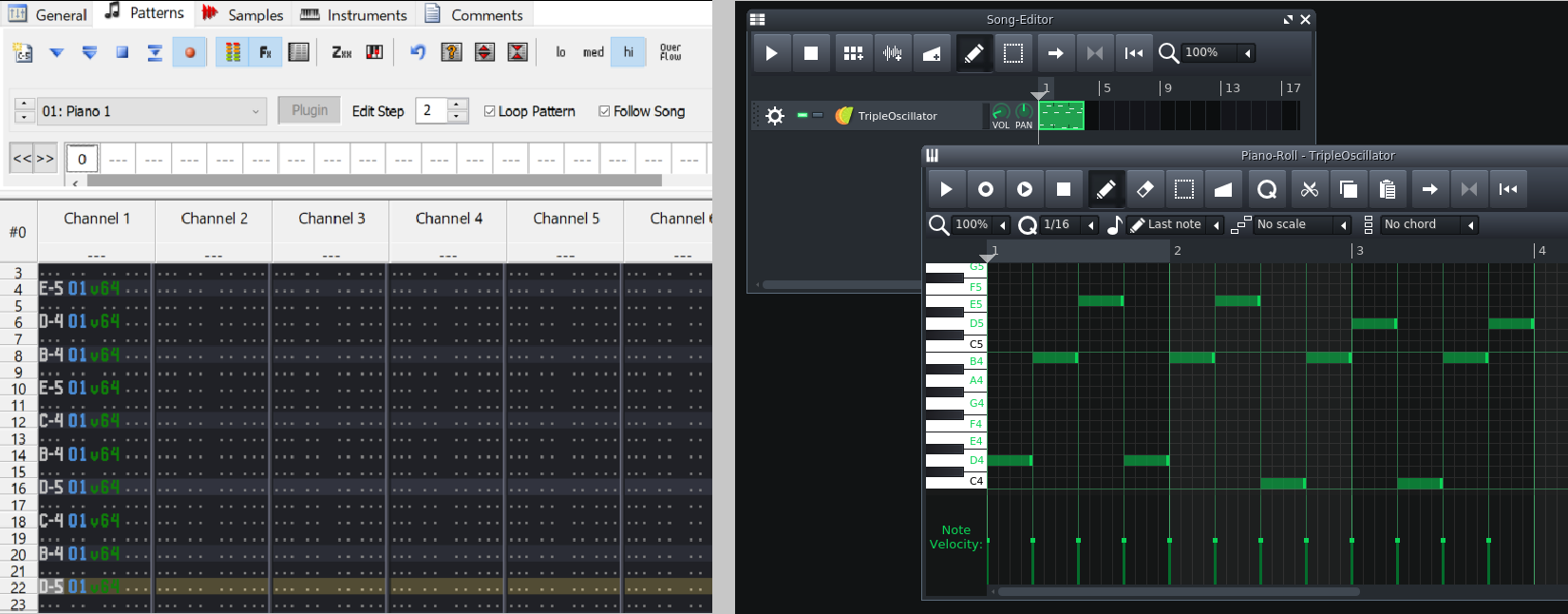

These formats usually work by sequencing a set of small audio files (called samples), such as a drum hit or a flute note. You enter notes into an Excel-like spreadsheet view called the pattern. This is different from what you may see in a modern Digital Audio Workstation (DAW). There, each instrument may get its own pattern, and you enter notes into a piano roll editor: the vertical position of a note represents its pitch, and its length represents how long it is played. Here's a visual example:

MIDI, on the other hand, only contains note data and which instrument should play each note. It may also include additional information, such as a volume change at a certain point in the song.
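To make the contrast more concrete, here is a minimal C++ sketch of both sides. On the tracker side, a pattern is modeled as a grid of rows (time) by channels (columns), where each cell may hold a note, an instrument and a volume. On the MIDI side, the note data is just a stream of small messages: a Note On message and a Program Change message are built from their status bytes. A helper then converts a note number to a frequency, using the usual equal-temperament convention in which note 69 is A4 at 440 Hz. `Cell`, `Pattern` and the function names are hypothetical and heavily simplified. Real tracker formats like IT and XM store more per cell (effects, for example) and pack the data differently.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// --- Tracker side (hypothetical, simplified) ---
struct Cell {
    std::optional<std::uint8_t> note;        // empty = no new note on this row
    std::optional<std::uint8_t> instrument;  // which sample/instrument plays it
    std::optional<std::uint8_t> volume;      // classic trackers use 0..64
};

class Pattern {
public:
    Pattern(std::size_t rows, std::size_t channels)
        : rows_(rows), channels_(channels), cells_(rows * channels) {}

    Cell& at(std::size_t row, std::size_t channel) {
        assert(row < rows_ && channel < channels_);
        return cells_[row * channels_ + channel];
    }

    // How many channels start a note on the given row.
    std::size_t notes_on_row(std::size_t row) const {
        assert(row < rows_);
        std::size_t count = 0;
        for (std::size_t c = 0; c < channels_; ++c)
            if (cells_[row * channels_ + c].note) ++count;
        return count;
    }

private:
    std::size_t rows_;
    std::size_t channels_;
    std::vector<Cell> cells_;  // row-major: row * channels_ + channel
};

// --- MIDI side ---
// Note On: status byte 0x90 | channel, then note number and velocity (0..127).
std::array<std::uint8_t, 3> note_on(std::uint8_t channel, std::uint8_t note,
                                    std::uint8_t velocity) {
    assert(channel < 16 && note < 128 && velocity < 128);
    return {static_cast<std::uint8_t>(0x90 | channel), note, velocity};
}

// Program Change: status byte 0xC0 | channel selects the channel's instrument.
std::array<std::uint8_t, 2> program_change(std::uint8_t channel, std::uint8_t program) {
    assert(channel < 16 && program < 128);
    return {static_cast<std::uint8_t>(0xC0 | channel), program};
}

// Equal temperament: 12 notes per octave, note 69 = A4 = 440 Hz.
double note_to_hz(int note) {
    return 440.0 * std::pow(2.0, (note - 69) / 12.0);
}
```

Note that the tracker cell stores *which sample* to play alongside the note, while the MIDI message only names a program number and leaves the actual sound up to whatever synthesizer receives it.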

Why Do This?

Music visualization tools probably already exist in certain places and programs, but they are nowhere near as ubiquitous, and they are rarely used to visualize the music itself. From what I can tell, these tools often generate the visualization from the waveform rather than from the arrangement of the notes. Take this music video, for example:

The ball thing, as you might be able to see, isn't actually reading the note data. It's most likely using the frequency spectrum to generate its movements. Frankly, I think this is an underwhelming use of visuals. There is a lot of untapped potential here to create procedurally generated or manually crafted visuals using the entirety of the song's data: the sounds used, the note placement and every other parameter. Imagine waves and ripples being created according to the note pattern, lights blinking based on the current drum pattern, and much more.

The closest thing I have found up till now that resembles what I want is Animusic, a MIDI Visualization tool. Here's a demo of that:

Unfortunately, it's unmaintained and closed source, so I probably can't modify much of what's already there to suit my needs. So what can I use or extend to make "dramatic" visualizations with almost complete creative freedom (almost a blank slate), and still do it with relative ease? Godot, of course!

The Requirements

Of course, Godot doesn't ship with a MIDI reader or a Music Tracker File reader, so we're going to have to implement our own. That means more C++ code!

Reading (and Playing) MIDI Files

There are several MIDI renderers available on the Internet, such as Fluidsynth, WildMIDI and Timidity. However, from a quick look at all of them, the ability to get the actual note data doesn't seem to be there, or at least it isn't obvious. That means we would need some other library to fetch the note data while the renderer plays the song through the speakers. That adds quite a bit of complexity (or at least more research), so I put MIDI aside in favour of tracker music files for now.
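Fetching the note data yourself would mean parsing the MIDI file directly. As a small taste of what that involves: in a Standard MIDI File, every event in a track is preceded by a delta time. That delta time is encoded as a variable-length quantity, with 7 data bits per byte and the high bit set on every byte except the last. A minimal decoder could look like the sketch below. `read_vlq` is a hypothetical helper, and a real parser would also have to handle running status, meta events and SysEx messages.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Decode one MIDI variable-length quantity starting at data[pos] and advance
// `pos` past it. Each byte contributes its low 7 bits; a clear high bit marks
// the final byte. The SMF format limits these values to 4 bytes.
std::uint32_t read_vlq(const std::vector<std::uint8_t>& data, std::size_t& pos) {
    std::uint32_t value = 0;
    for (int i = 0; i < 4; ++i) {
        assert(pos < data.size());
        const std::uint8_t byte = data[pos++];
        value = (value << 7) | (byte & 0x7F);
        if ((byte & 0x80) == 0) return value;  // last byte of this quantity
    }
    assert(false && "VLQ longer than 4 bytes");
    return value;
}
```

For example, the bytes `0x81 0x00` decode to 128, and `0xFF 0x7F` decode to 16383.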

Reading (and Playing) Tracker Modules

Working with these is a much simpler task, because tracker music files are completely self-contained. The audio samples of the instruments, the metadata of the song (like the author name, comments, title and other stuff), and the note data are all put into a single file. That file can be played without any additional dependencies aside from the player itself. We'll be using libopenmpt to read and render our music, and it supports quite a large number of formats.

Writing the GDNative Library

Writing the GDNative library wasn't really a hassle this time around, now that I had experience from the last one. There was also a lot less "shooting in the dark", since we're making the library do the job it's intended to do. However, there were still some technical gotchas with respect to Godot that you might need to keep in mind if you are dealing with something like this.

Integrating the library is slightly less elegant than I initially expected, but it's not much of a hassle even then. Let's first see how audio players work in Godot.

AudioStreamPlayer and Friends

The AudioStreamPlayer and related classes are used to play music and sound effects in Godot. They require an AudioStream resource, or one of its derived classes such as AudioStreamOGGVorbis or AudioStreamMP3, and the stream supplies the actual sound data to the player. For our purposes, we need the AudioStreamGenerator class, which allows us to supply sound data directly from GDScript or C++.

AudioStreamPlayer has a get_stream_playback() function, which returns an AudioStreamPlayback object. That object isn't very useful in normal cases. However, when the player's stream is set to an AudioStreamGenerator, the function returns an AudioStreamGeneratorPlayback instead. This is the object that actually allows us to push our rendered audio data to the player.
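The usual pattern with AudioStreamGeneratorPlayback is to ask how many frames it can currently accept, via get_frames_available(), and then push exactly that many with push_frame(). Godot's types aren't available in a standalone snippet, so the sketch below uses a hypothetical `FakePlayback` class that only mimics those two calls. `Frame` stands in for Godot's Vector2, with one value for the left channel and one for the right. This illustrates the idea and is not the library's actual code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Stand-in for Godot's Vector2 stereo frame (left, right).
struct Frame { float left; float right; };

// Hypothetical stand-in for AudioStreamGeneratorPlayback: a bounded queue that
// the audio server would drain. Only the two calls used below are modeled.
class FakePlayback {
public:
    explicit FakePlayback(std::size_t capacity) : capacity_(capacity) {}
    std::size_t get_frames_available() const { return capacity_ - queued_.size(); }
    void push_frame(Frame f) {
        assert(get_frames_available() > 0);
        queued_.push_back(f);
    }
private:
    std::size_t capacity_;
    std::vector<Frame> queued_;
};

// Push as many rendered frames as the playback can take right now.
// `render` is any callable returning the next Frame (e.g. from a module renderer).
template <typename Render>
std::size_t fill_playback(FakePlayback& playback, Render render) {
    const std::size_t to_fill = playback.get_frames_available();
    for (std::size_t i = 0; i < to_fill; ++i) playback.push_frame(render());
    return to_fill;
}
```

Only asking for what the buffer can hold avoids overfilling it. If the buffer is drained faster than it is filled, you get audible gaps instead.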

Now, coming to the inelegance. There are two problems here. First, the generator stream and its playback object have to be set up by hand. Because of that, there isn't a way to create a neat little derived stream class that we could just assign to an AudioStreamPlayer and then use like any other stream (such as an audio file). We would either have to manually pass the playback object to our library, which would then push the audio data to it, or derive from the AudioStreamPlayer class itself and have it set the generator stream automatically. Second, that derived player would work, but its stream property would stick out. We couldn't hide it, so we would have to stop the user from changing it with a custom setter. I've gone with the first approach for now because it's the most obvious one.

The Library

Writing the library mostly consisted of porting all the libopenmpt functions over to the GDNative class. The class more or less acts as a wrapper around the openmpt::module object, which holds the module file data, metadata and rendering info. The library fills the audio buffer on a separate thread, so we don't have to call a fill_buffer() function or something similar on every iteration of _process().
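Here is a minimal sketch of the "render on its own thread" idea, using only the C++ standard library. A worker thread keeps a bounded queue topped up, and a consumer (standing in for the audio player) pops frames from it. `BackgroundRenderer` is a hypothetical name, not the library's class. The placeholder zeros stand in for samples that would really come from libopenmpt.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <chrono>
#include <cstddef>
#include <deque>
#include <mutex>
#include <thread>

class BackgroundRenderer {
public:
    explicit BackgroundRenderer(std::size_t capacity) : capacity_(capacity) {
        assert(capacity > 0);
    }
    ~BackgroundRenderer() { stop(); }

    void start() {
        if (worker_.joinable()) return;  // already running
        running_ = true;
        worker_ = std::thread([this] { loop(); });
    }

    void stop() {
        running_ = false;
        if (worker_.joinable()) worker_.join();
    }

    // Consumer side: take up to `max_frames` frames and return how many were taken.
    std::size_t pop(std::size_t max_frames) {
        std::lock_guard<std::mutex> lock(mutex_);
        const std::size_t n = std::min(max_frames, queue_.size());
        queue_.erase(queue_.begin(), queue_.begin() + static_cast<std::ptrdiff_t>(n));
        return n;
    }

    std::size_t buffered() {
        std::lock_guard<std::mutex> lock(mutex_);
        return queue_.size();
    }

private:
    // Worker: top the queue up to `capacity_`, then sleep briefly and repeat.
    void loop() {
        while (running_) {
            {
                std::lock_guard<std::mutex> lock(mutex_);
                while (queue_.size() < capacity_) queue_.push_back(0.0f);  // placeholder "render"
            }
            std::this_thread::sleep_for(std::chrono::milliseconds(1));
        }
    }

    std::size_t capacity_;
    std::atomic<bool> running_{false};
    std::thread worker_;
    std::mutex mutex_;
    std::deque<float> queue_;
};
```

The mutex keeps the producer and consumer from touching the queue at the same time. The atomic flag lets stop() end the loop cleanly before joining the thread.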

There's one small problem with the library, though: libopenmpt currently can't render audio through external VST plugins, such as synthesizers. I use an external synthesizer plugin (Vital) a lot, so I can't actually render the audio live or use the channel volume values. As a workaround for the visualization, I had to play pre-rendered audio and drop the idea of using the channel volume values.

The Test Program

Having this library means we have quite a few parameters at our disposal to make our cool visualizations, such as:

- The tempo of the song.

- The current notes being played, what instrument is playing them, at what volume and with which parameters.

- The row-wise and time-wise position of the song.

- The current volume of each channel (column).

- The global volume of the song.

- The metadata of the song.
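To turn the tempo and row position into something a visualization can use, it helps to know how trackers count time. This sketch assumes the classic MOD/XM/IT timing model (OpenMPT also supports other tempo modes). In that model, one tick lasts 2.5 / tempo seconds and one row lasts `speed` ticks. libopenmpt can report the current values through openmpt::module::get_current_speed() and get_current_tempo(), and those values could be fed into helpers like these. The function names are hypothetical.

```cpp
#include <cassert>
#include <cmath>

// Classic tracker timing (assumed): a tick lasts 2.5 / tempo seconds,
// and a row lasts `speed` ticks.
double seconds_per_row(int speed, double tempo) {
    assert(speed > 0 && tempo > 0.0);
    return speed * 2.5 / tempo;
}

// The BPM a listener hears, given how many rows make up one beat
// (4 rows per beat is a common convention, not a rule of the format).
double perceived_bpm(int speed, double tempo, int rows_per_beat) {
    assert(rows_per_beat > 0);
    return 60.0 / (seconds_per_row(speed, tempo) * rows_per_beat);
}

// Fractional row position after `seconds`, assuming speed and tempo stay constant.
double row_at(double seconds, int speed, double tempo) {
    return seconds / seconds_per_row(speed, tempo);
}
```

With speed 6 and tempo 125, a row lasts 0.12 seconds, so at four rows per beat the song runs at 125 BPM. Real songs can change speed and tempo mid-song, which is why asking the player for the current position is more reliable than integrating time yourself.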

Now we need some way to test all of this, so I made a demo application. Here's a recording of it. You'll be able to see the individual columns jumping up and down with the volume. They're color-coded as well!
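One simple way to get the jump-up, fall-back motion of the columns from per-channel volume levels is an instant attack with a linear fall-off, as sketched below. libopenmpt offers per-channel VU values through openmpt::module::get_current_channel_vu_mono(). This sketch assumes the levels have been normalized to 0..1. `ChannelBars` is a hypothetical helper and not necessarily how the demo does it.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

class ChannelBars {
public:
    ChannelBars(std::size_t channels, double fall_per_second)
        : heights_(channels, 0.0), fall_per_second_(fall_per_second) {
        assert(fall_per_second >= 0.0);
    }

    // `levels` holds one 0..1 value per channel; `delta` is the frame time in seconds.
    void update(const std::vector<double>& levels, double delta) {
        assert(levels.size() == heights_.size());
        for (std::size_t i = 0; i < heights_.size(); ++i) {
            const double target = std::min(std::max(levels[i], 0.0), 1.0);
            const double fallen = heights_[i] - fall_per_second_ * delta;
            heights_[i] = std::max(target, fallen);  // jump up instantly, fall linearly
        }
    }

    double height(std::size_t channel) const { return heights_.at(channel); }

private:
    std::vector<double> heights_;
    double fall_per_second_;
};
```

Calling update() once per frame with the frame's delta time keeps the fall speed independent of the frame rate.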

Pretty cool, right? No? Okay, let's get to the actual visualization then:

Designing the Visualization

The main purpose of this visualization is, of course, to show off the library. Before I start describing the whole thing, I think it would be easier if I just showed you what I ended up with first.

Pretty cool, right? No? Alright, fine, let's just get to what I did here. As you can see, each instrument that plays in the music has a label and a progress bar showing its "period", after which it repeats itself. The labels are moved around using an AnimationPlayer. The library, of course, drives the progress bar updates and the "bumps" of each label's color on the beat. I intended the little dotted line-rails that you see scrolling around to move at the speed of the beat, so that each line would sweep one dot per beat. However, I dropped the idea halfway through because it looked like too much work for something a viewer might easily miss. I could have used the channel volume values to "bump" the label colors, but because of the plugin issue mentioned before, I had to use tweens instead.
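Both the progress bar and the color "bump" boil down to small bits of arithmetic on the song position. In the hypothetical sketch below, `period_progress` maps the fractional row position to how far through an instrument's repeating period we are. `bump_amount` mimics a linear tween that starts at full strength on a beat and fades out over a fixed duration. The parameters (period start and length in rows, tween duration) are assumptions, not values from the actual project.

```cpp
#include <cassert>
#include <cmath>

// Progress (0..1) through a repeating period of `period_rows` rows that first
// starts at row `period_start`, given the song's fractional row position.
double period_progress(double row, double period_start, double period_rows) {
    assert(period_rows > 0.0);
    double t = std::fmod(row - period_start, period_rows);
    if (t < 0.0) t += period_rows;  // wrap positions before the start into [0, period_rows)
    return t / period_rows;
}

// Tween-like bump: 1.0 right on the beat, fading linearly to 0.0 over
// `duration` seconds. `since_beat` is the time elapsed since the last beat.
double bump_amount(double since_beat, double duration) {
    assert(duration > 0.0);
    if (since_beat < 0.0 || since_beat >= duration) return 0.0;
    return 1.0 - since_beat / duration;
}

// Blend one color component from its base value toward its highlight value.
double mix(double base, double highlight, double amount) {
    return base + (highlight - base) * amount;
}
```

For instance, with a 64-row period starting at row 0, row 96 is halfway through the second repetition, so the progress bar would read 0.5.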

Making the Music

I'll try to describe the music-making process in more detail this time around. This is a pretty hard and awkward task without knowing how to describe music, or any music theory, but I'll give it a try anyway. I'm using the word "beat" here mostly informally.

Let's first try to describe how the song progresses:

- The song starts with a bass drum (the one that goes "bum") and a snare drum (the one that goes "diss").

- Then a clap is layered on top of the snare to make it sound better. I think people pull that trick in their songs to make their snares sound punchier. Maybe getting better at making drum (percussion) instruments is a better idea.

- Then the hi-hat is added, which makes the drum pattern more complex. It becomes hard to tell right away what the pattern is.

- Finally, for the drums, a cowbell is added that plays on a fixed beat except at the end of its period. I don't know if it's just me, but even though the cowbell's pattern is really simple, the song as a whole seems to sound more complex. The cowbell also seems to play at variable times rather than fixed ones (ignoring the last hit).

- Then the first instrument that isn't a drum, the "pluck synth", is added.

- Now we get to the "main event" of the song: the "saw synth" instrument is added, the pluck synth's beat pattern changes, and a "backing" track is added an octave below the current pluck synth track.

- Finally, the "square synth" instrument is added, and then the song fades to silence.

I think having seen the visualization will help a lot in understanding what I'm saying here, so that this doesn't just read as incoherent rambling.

Now that that's out of the way, let me try describing how I made the synth instruments:

- The "Pluck Synth": This pluck is of the same kind as the plucks you might hear in songs like this one. Instruments like this are usually created by playing a saw wave, sliding a low-pass filter down to a certain point, and then ramping the volume down almost instantaneously.

- The "Saw Synth": This synth is created using something called "unison". Unison refers to playing multiple instruments, of the same or different types, together at the same relative pitch, possibly separated by a constant number of notes or octaves. You can hear an example with the "background" string instruments at the start of this song. (Please note that I'm just going off of what Wikipedia's telling me, and the description just seems to match what I'm doing in the synthesizer.) In addition, I've added a notch filter (a filter that cuts out a narrow band of frequencies) that periodically sweeps up and down. This creates the sort of "widening" or "scraping" effect that you might be able to hear.

- The "Square Synth": This is the simplest of the three instruments. It's just a square wave with a static notch filter, some reverberation (reverb), and "note gliding" (also called portamento) enabled.
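The pluck recipe from the list above (saw wave, falling low-pass filter, quick volume drop) can be sketched in a few lines of C++. The sketch renders one note into a buffer using three parts:

- a naive sawtooth;
- a one-pole low-pass filter whose cutoff slides down exponentially over the length of the note;
- a very short linear fade-out at the end.

The function name and all parameter values are illustrative assumptions. This is not how Vital implements its oscillators or filters, and the naive saw will alias at high pitches.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

std::vector<float> render_pluck(double freq, double seconds, int sample_rate,
                                double cutoff_start, double cutoff_end,
                                double release_seconds) {
    assert(freq > 0.0 && seconds > 0.0 && sample_rate > 0);
    assert(cutoff_start > 0.0 && cutoff_end > 0.0 && release_seconds >= 0.0);
    const double pi = 3.14159265358979323846;
    const std::size_t n = static_cast<std::size_t>(seconds * sample_rate);
    const std::size_t release =
        std::min(n, static_cast<std::size_t>(release_seconds * sample_rate));
    std::vector<float> out(n);
    double phase = 0.0;     // 0..1 position within the current saw cycle
    double filtered = 0.0;  // one-pole low-pass state
    for (std::size_t i = 0; i < n; ++i) {
        const double saw = 2.0 * phase - 1.0;  // ramp from -1 to 1
        phase += freq / sample_rate;
        if (phase >= 1.0) phase -= 1.0;

        // Cutoff slides exponentially from cutoff_start to cutoff_end over the note.
        const double t = static_cast<double>(i) / static_cast<double>(n);
        const double cutoff = cutoff_start * std::pow(cutoff_end / cutoff_start, t);
        const double alpha = 1.0 - std::exp(-2.0 * pi * cutoff / sample_rate);
        filtered += alpha * (saw - filtered);

        // Linear fade-out over the final `release` samples.
        double gain = 1.0;
        if (release > 0 && i + release >= n)
            gain = static_cast<double>(n - i) / static_cast<double>(release);
        out[i] = static_cast<float>(filtered * gain);
    }
    return out;
}
```

A call like `render_pluck(220.0, 0.5, 44100, 8000.0, 300.0, 0.01)` would produce half a second of a bright pluck that darkens as the filter closes.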

(I realise that these descriptions are grossly inadequate without any background knowledge of how synths work. I'll probably write an article about all of the jargon and techniques I've learnt about music up till now. It'll be fun to learn just how ubiquitous the saw wave is in digital music!)
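For the "saw synth", it may help to see what unison usually means inside a synthesizer. Several copies of the same oscillator play the same note, each detuned by a slightly different amount. The tiny pitch differences make the copies drift in and out of phase, which thickens the sound. The sketch below spreads the copies evenly across ±`detune_cents` and averages them. `unison_frequencies` and `render_unison_saw` are hypothetical helpers, and the notch filter sweep is left out. Real synths typically also randomize each voice's starting phase and pan the voices across the stereo field.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Frequencies of `voices` copies of a note, spread evenly from -detune_cents
// to +detune_cents (100 cents = 1 semitone).
std::vector<double> unison_frequencies(double freq, int voices, double detune_cents) {
    assert(voices >= 1);
    std::vector<double> out;
    for (int v = 0; v < voices; ++v) {
        const double spread = voices == 1 ? 0.0 : -1.0 + 2.0 * v / (voices - 1);  // -1..1
        out.push_back(freq * std::pow(2.0, spread * detune_cents / 1200.0));
    }
    return out;
}

// Sum naive sawtooth voices and divide by the voice count to stay within -1..1.
// All voices start at phase 0 here for simplicity.
std::vector<float> render_unison_saw(double freq, int voices, double detune_cents,
                                     std::size_t frames, int sample_rate) {
    const std::vector<double> freqs = unison_frequencies(freq, voices, detune_cents);
    std::vector<double> phases(freqs.size(), 0.0);
    std::vector<float> out(frames);
    for (std::size_t i = 0; i < frames; ++i) {
        double sum = 0.0;
        for (std::size_t v = 0; v < freqs.size(); ++v) {
            sum += 2.0 * phases[v] - 1.0;
            phases[v] = std::fmod(phases[v] + freqs[v] / sample_rate, 1.0);
        }
        out[i] = static_cast<float>(sum / static_cast<double>(freqs.size()));
    }
    return out;
}
```

With an odd number of voices, the middle voice stays exactly on the original pitch, and the others sit symmetrically above and below it.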

And that's all for the song. It achieves its purpose, but it doesn't show as much musical variation as I would like. So far, I usually end up writing only one or maybe two "main tunes" per song and then repeating them until the song ends. The song of the previous video is somewhat of an exception, although it still doesn't have as much variation as I would like. In the future, I want to push myself to write (or rather, stick to writing) more elaborate and detailed songs.

Final Result

You can find the GDNative library here. As before, if you find any problems in the library that you want resolved, feel free to open an issue on the GitHub page.

Conclusion

It's been two months and I'm still updating this website! That's a record in persistence for me, at least in terms of what I show publicly. Beyond that, this tool will reduce the effort of making animations like this quite a bit. After I (possibly) implement the MIDI reader, I might be able to create an entire automated visual system for music! Even for music that doesn't have a MIDI track or any sequencer data, we could use MIDI or a tracker module as an intermediate format to create the visualization. There are quite a few possibilities here. We could probably also use this as a teaching or music communication tool!

Of course, I don't want to skimp on actually making things that are good to watch, and in my current work the animations look more like afterthoughts than the main goal. So for the next few weeks, I'll focus on putting out something that is worth watching. My animations will only get better as I spend more quality time on them, so I should work on them whenever I can. I think I went slower this month, so I'll have to pick up the pace from next month onwards.

Well, that's it for now! See you at the end of next month, probably.