Just here for the code? Click here!

This is an animation thing that I made in Godot (3.x) as a sort of "intro" sequence to the whole "Visphort" thing. To be honest the animation doesn't really have much going on, but being able to realize at least a portion of the things that I had in my mind has been a very useful experience. One small step towards the completion of the rest of the stuff.

Watch it here!

Inception

For a long time I have had these visualizations in my mind that come up when I listen to music or just spontaneously. This is one of them. I didn't get around to actually doing anything with the unfinished music until a few months ago.

Tools Used

The animation is made in the Godot game engine (3.x). Why Godot and not Blender? Well, the animation seemed "mechanical" enough that manually setting each keyframe in Blender's animation editor would be tedious. Of course, what is done here could be done with some Python scripting, which is something I am planning to visit in the future, but at the time I didn't really want to bother with it.

Secondly, rendering in Blender is slow, while simple programs in Godot can run at 60 fps on my low-spec (by today's standards) computer. This one did, so it could be captured live (unfortunately that did not work out as intended, as you will see further down the article). The third reason is that I wanted to test out the 3D capabilities of Godot, which I hadn't done until now.

Building

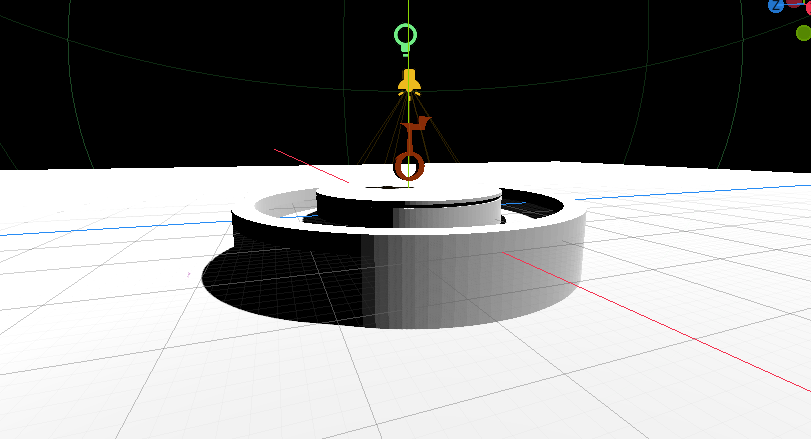

I worked on this on and off while working on other projects (like this website!). I just started out with the stock mesh instances that were available with MeshInstance (the cylinders, the planes, etc), and they were adequeate for what I initially had in mind. The orange logo thing is just a SVG extruded out into Blender, then imported as a model. The background is one of the many stock, royalty free night sky images you can get on the internet. Here's a screenshot of that early stuff. Not too different:

Then I decided that I wanted those moving ring things and for that, I would have to make custom models. Eventually I ditched the MeshInstance stock meshes and instead decided on making the whole scene as a model in Blender.

Spinning the Camera

The camera is spun around with the help of another animation player that is set to loop, and the speed of which is controlled by the main animation player. A custom NodePath is used to control only the value of the rotation vector that rotates the camera along the vertical axis on the center of the "table."

That's also something to keep in mind if you want to do this sort of stuff: you can drive other AnimationPlayers from your main AnimationPlayer to make such repetitive animations, provided you use NodePaths properly.

Another very important thing that I noticed while working on this is how important using nodes as pivots is for rotation, especially in 3D space. A pivot node lets you apply a transformation to the node you care about through its parent, so you can keep operating on the node itself without having to apply a prerequisite transformation manually every time.
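To make the pivot idea concrete, here is a minimal sketch of the math a pivot node saves you from doing by hand. It is plain Python rather than GDScript, and the names (`rotate_y`, `orbit_about_pivot`) and the coordinates are made up for illustration; none of this is Godot API.

```python
import math

def rotate_y(point, angle_deg):
    """Rotate a 3D point around the vertical (Y) axis through the origin."""
    x, y, z = point
    a = math.radians(angle_deg)
    return (x * math.cos(a) + z * math.sin(a),
            y,
            -x * math.sin(a) + z * math.cos(a))

def orbit_about_pivot(point, pivot, angle_deg):
    """The manual version: move into the pivot's local space,
    rotate there, then move back out."""
    local = tuple(p - c for p, c in zip(point, pivot))
    rotated = rotate_y(local, angle_deg)
    return tuple(r + c for r, c in zip(rotated, pivot))

# Hypothetical setup: table centre at (2, 0, 3), camera 5 units out and 1 up.
pivot = (2.0, 0.0, 3.0)
camera = (7.0, 1.0, 3.0)
quarter_turn = orbit_about_pivot(camera, pivot, 90.0)
```

With a pivot node as the camera's parent, the camera simply keeps its local offset of (5, 1, 0) and only the pivot's Y rotation needs to be animated; the translate, rotate, translate-back dance happens in the scene tree.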

Music Syncing

If you have seen the animation, you might have noticed that the logo thing jumps up and lights up along with each beat of the bassdrum. The Godot BPM Demo shows a pretty good way to achieve that. I adapted the general idea a little bit to allow the beat to b

The "bumping" animation was initially implemented as a set of 2 tweens: one that goes up, and another that goes down after the first one completes. This was later replaced by another animation player that describes the bump and is played as needed. As for starting and stopping the beat triggering, a simple boolean stops the beat loop from playing that animation.
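The general idea can be sketched roughly like this: turn the song position into a beat index, fire a callback whenever the index changes, and gate the callback with a boolean. This is a plain Python sketch, not the actual project code; the `BeatTrigger` class, the `on_beat` callback, and the 120 BPM value are all assumptions for illustration.

```python
class BeatTrigger:
    """Fires on_beat(beat_index) once each time a new beat starts."""

    def __init__(self, bpm, on_beat):
        self.seconds_per_beat = 60.0 / bpm
        self.on_beat = on_beat
        self.enabled = True      # the boolean that starts/stops the triggering
        self.last_beat = -1

    def update(self, song_position):
        # Which beat does the current song position fall into?
        beat = int(song_position // self.seconds_per_beat)
        if beat != self.last_beat:
            self.last_beat = beat
            if self.enabled:
                self.on_beat(beat)   # e.g. play the "bump" animation

# Simulate 1.5 seconds of 60 fps frames at an assumed 120 BPM.
bumps = []
trigger = BeatTrigger(bpm=120, on_beat=bumps.append)
for frame in range(90):
    trigger.update(frame / 60.0)
```

Tracking the beat index even while `enabled` is false means that re-enabling the trigger mid-beat does not fire a late bump; it waits for the next beat boundary.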

Things are slightly different, however, when you are not playing this in real time. This is discussed below.

Actually Recording the Animation

Welcome to the hard part!

Godot (3.x) doesn't have a mode that allows you to generate a video of the application output, and that makes sense, since Godot is a game engine and not animation software. Fine, so we could just use a screen recorder, right? Well, if you had a high-spec computer, maybe. Unfortunately, I don't. Using a screen recorder in this case would just result in a choppy, low-quality recording because of the resources used by the recorder while the game is running, and at higher resolutions like 1920x1080, the game itself runs choppily.

There are a few ways we could proceed from here:

Screenshot Concatenate Route

Several utilities/demo projects like this one and this one allow you to create a series of screenshots of each frame. Problem is, this is extremely slow. It took roughly 5 seconds to render each frame, so a complete recording would probably take entire days, and on top of that, encoding all of those screenshots into the video file and then adding the song would take another few hours. Pretty much impractical, so this is not really an option.

Porting to Godot 4 Alpha

Godot 4 has a built-in Movie Maker Mode, which allows you to render videos (without the sound, of course) in non-realtime. Just what I needed! Just import the project into Godot 4 and we are good to go, right?

Eeeh... No. Not fixing that.

Importing the project into Godot 4 screws up all the materials. Plus, all the inspector angle values are now in radians as well so the animations are broken. Not really willing to go through the effort of fixing that.

GDNative+FFmpeg Route

This is probably the most technically difficult option, but once done it would prove to be very useful, at least until Godot 4 comes out. Long story short, it works! If you want to skip all the technical crap, you can skip over here.

This would involve writing a GDNative Library/NativeScript that uses FFmpeg (specifically libavcodec, libavformat and libswscale) to encode the dumped Viewport frames into a video file. Basically the screenshot concatenate route, but instead of saving the frame to a file, we pass it on to FFmpeg.

First, what we want to do is learn how to write and use NativeScript. We can start with the example GDNative projects. I had first tried to go with the C example since FFmpeg is written in C, but I quickly realised how much effort that would be. You see, GDNative depends on a monumental amount of preprocessing, done using both Python scripts and C++ template magic, for things such as conversion of Variants to their underlying types, repeated allocation and deallocation of primary types such as strings, registering variables and declaring get/set functions for them, etc.

This is mostly due to the fact that GDScript is dynamically typed, and there is no easy way to express that in C. (Though I would have to look at the C APIs of other scripting languages, say Lua and Python, to judge this properly, since those are primarily built for writing libraries in C.) A lot of this pain and tediousness is alleviated if one writes a plugin using the C++ API, so C++ it is. I won't go through the specifics of actually writing and compiling GDNative, but it's pretty straightforward once you look at it.

Now we need some sort of example of how to actually use FFmpeg to perform the encoding. You will find this in muxing.c, in the doc/examples directory of FFmpeg's source repo.

Encoding and muxing are 2 different things, actually. To see why, let me explain how audio and video formats store data. You see, media types you find on the internet such as MP4, WEBM, PNG, JPEG can often be roughly divided into 2 parts: the format, which stores the metadata of the file and describes the file structure, and the actual encoded data itself. This means that we can divide the process of creating a media file into 2 parts: writing the format information, such as the headers and metadata, and writing the encoded video or audio data. The program that creates the encoded data is called a codec. This is handled by libavcodec of FFmpeg.

Modern media file formats such as WEBM and MP4 are often called "container" formats. This means that they serve simply as a "container" for the encoded data, and the encoded data can come from any codec the container is compatible with. The format merely serves as a "vehicle" for storing the encoded data. Thus, the file format and the encoded data are not necessarily tied together.

Video file formats such as MP4, WEBM or AVI often contain audio as well as video, played side by side. Bits of audio and video are often stored alternately, in an interleaved fashion, within the file, which allows a video player to get both the video and audio data simultaneously and play them. The process of interleaving this data is called Multiplexing or Muxing, and the process of splitting this interleaved data back into separate audio and video streams (still encoded, until a codec decodes them) is called Demultiplexing or Demuxing. This is handled by libavformat of FFmpeg.
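As a toy illustration of interleaving (not how libavformat is implemented), the sketch below merges two already-sorted packet lists by timestamp and then splits them apart again. The packet tuples, the 30 fps and 20 ms figures, and the `mux`/`demux` names are all made up for the example.

```python
import heapq

# Hypothetical packets: (timestamp in seconds, stream name, payload).
video = [(i / 30.0, "video", f"v{i}") for i in range(3)]   # 30 fps video
audio = [(i * 0.02, "audio", f"a{i}") for i in range(4)]   # 20 ms audio chunks

def mux(*streams):
    """Interleave packets from several streams in timestamp order."""
    return list(heapq.merge(*streams, key=lambda packet: packet[0]))

def demux(packets):
    """Split an interleaved packet list back into per-stream lists."""
    streams = {}
    for packet in packets:
        streams.setdefault(packet[1], []).append(packet)
    return streams

interleaved = mux(video, audio)
separated = demux(interleaved)
```

Note that the payloads stay encoded the whole way through: muxing and demuxing only shuffle packets around, which is why they are a separate job from the codec's. In FFmpeg's API, the interleaving step is what `av_interleaved_write_frame` takes care of.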

Do note that Demuxers and Muxers also do the work of reading and writing the file format and associated metadata as well, since muxing or demuxing may be specific to the file format we are reading and writing to.

What you might have inferred from this is that we need to first encode the data, then mux that into a file. That's exactly what muxing.c shows us how to do! So I just went ahead and adapted that example into a NativeScript class with 4 functions: the first for initialization, the second for starting the recorder, the third for calling the recorder every process loop to dump and encode the frame, and the fourth for finishing the recording.
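The four-function shape might look something like the skeleton below. This is only a lifecycle sketch in Python with the FFmpeg work stubbed out; the class name, method names, and parameters are hypothetical and are not taken from the actual library.

```python
class Recorder:
    """Lifecycle sketch: the comments mark where FFmpeg would be called."""

    def __init__(self):
        self.recording = False
        self.frames_written = 0
        self.log = []

    def setup(self, path, width, height, fps):
        # 1. Initialization: store settings; a real version would choose
        #    the container format and the video codec here.
        self.path, self.size, self.fps = path, (width, height), fps
        self.log.append("setup")

    def start(self):
        # 2. Start: open the output file and write the container header
        #    (avformat_write_header in FFmpeg).
        self.recording = True
        self.frames_written = 0
        self.log.append("header")

    def process(self, rgb_bytes):
        # 3. Every process loop: take the dumped viewport frame, encode it,
        #    and hand the resulting packet to the muxer.
        if not self.recording:
            return
        expected = self.size[0] * self.size[1] * 3   # 3 bytes per RGB pixel
        if len(rgb_bytes) != expected:
            raise ValueError(f"expected {expected} bytes, got {len(rgb_bytes)}")
        self.frames_written += 1

    def finish(self):
        # 4. Finish: flush the encoder and write the container trailer
        #    (av_write_trailer in FFmpeg).
        if self.recording:
            self.recording = False
            self.log.append("trailer")

# Usage with a tiny made-up 4x2 frame size.
rec = Recorder()
rec.setup("out.mp4", 4, 2, 60)
rec.start()
for _ in range(3):
    rec.process(bytes(4 * 2 * 3))
rec.finish()
```

Keeping `process` a no-op when not recording means it can be called unconditionally from the process loop, with `start` and `finish` acting as the only switches.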

So after a lot of back and forth, debugging and all, I managed to get the recorder working. The 3-minute 1920x1080 animation that you see in the video was rendered in about 40 minutes!
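For a rough sense of scale, here is the back-of-the-envelope arithmetic behind those numbers. The 60 fps output rate is an assumption (the frame rate of the final video isn't stated here), and the 5-seconds-per-frame figure for the screenshot route is itself only an estimate.

```python
fps = 60                      # ASSUMED output frame rate
duration_s = 3 * 60           # the 3-minute animation
frames = fps * duration_s     # total frames to render

ffmpeg_render_s = 40 * 60     # "about 40 minutes" with the FFmpeg recorder
per_frame_s = ffmpeg_render_s / frames

screenshot_per_frame_s = 5    # rough estimate for the screenshot route
screenshot_total_h = frames * screenshot_per_frame_s / 3600
speedup = screenshot_per_frame_s / per_frame_s

print(f"{frames} frames, {per_frame_s:.2f} s per frame with the recorder")
print(f"screenshot route: ~{screenshot_total_h:.0f} h of dumping, {speedup:.1f}x slower")
```

Under those assumptions the recorder spends a bit over a fifth of a second per frame, while the screenshot route would need many hours of frame dumping alone, before any encoding.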

If you are curious about libswscale, it is used to convert our viewport pixel data into a pixel format that the current FFmpeg video encoder accepts. Before that, the Godot Image object itself already has to convert the pixels into a format that libswscale can handle (currently it's hard-coded for AV_PIX_FMT_RGB24, or RGB8 as it is called in Godot). So yeah, 2 conversions in total.

Multithreading

Of course, 40 minutes is still kind of slow, so I wanted to separate frame dumping and video encoding by creating a buffer queue for frames to be written to (inspired by this post). Unfortunately it's buggy and the video flickers a lot. No idea if it's because of a bug in the buffer queue or not. There seems to be no noticeable speed increase either, so I have shelved it for now.
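The producer/consumer idea looks roughly like the sketch below. It is a generic Python illustration using the standard `queue` and `threading` modules, not the shelved C++ code; the frame contents and the `run_pipeline` name are invented for the example.

```python
import queue
import threading

def run_pipeline(num_frames, frame_size=8, max_buffered=4):
    """Dump frames on one thread, 'encode' them on another."""
    frames = queue.Queue(maxsize=max_buffered)  # bounded: producer waits when full
    encoded = []
    scratch = bytearray(frame_size)             # reused "viewport" buffer

    def encoder():
        while True:
            frame = frames.get()
            if frame is None:                   # sentinel: no more frames coming
                break
            encoded.append(frame[0])            # stand-in for real encoding work

    worker = threading.Thread(target=encoder)
    worker.start()
    for i in range(num_frames):
        scratch[:] = bytes([i % 256]) * frame_size  # "dump" frame i into the buffer
        frames.put(bytes(scratch))              # hand over a copy, not the buffer
    frames.put(None)
    worker.join()
    return encoded

result = run_pipeline(50)
```

Two details matter in a design like this: the queue is bounded so dumped frames can't pile up in memory, and each frame is copied before being queued so the producer can't overwrite data the encoder is still reading. Whether a missing copy like that has anything to do with the flicker described above is pure speculation.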

Instead of the buffer queue, it may be useful to explore the options for multithreading in FFmpeg itself.

Final Result

And there you go. You can find the code here. Let me know if you run into errors or want to add more stuff! But for now I am done with this.

Non-Realtime Beat Syncing

For beat syncing on a non-realtime scenario like this one, I simply used the main AnimationPlayer's current position, minus the start time of the song, as the current position within the song. It seems to work well.
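A small sketch of why this works: when every rendered frame advances the animation by a fixed step, the song position depends only on the frame number, not on how long the frame took to render. The Python below is illustrative only; the 60 fps rate, the 2-second song start, and the 120 BPM value are made-up numbers.

```python
def song_position(animation_position, song_start):
    """Position within the song, derived from the animation timeline."""
    return animation_position - song_start

def beat_frames(total_frames, fps, song_start, bpm):
    """Return (frame, beat) pairs for the frames on which a new beat begins."""
    seconds_per_beat = 60.0 / bpm
    hits = []
    last_beat = -1
    for frame in range(total_frames):
        animation_position = frame / fps        # fixed step per rendered frame
        position = song_position(animation_position, song_start)
        if position < 0:
            continue                            # the song hasn't started yet
        beat = int(position // seconds_per_beat)
        if beat != last_beat:
            last_beat = beat
            hits.append((frame, beat))
    return hits

# Assumed values: 60 fps output, song starts 2 s into the animation, 120 BPM.
hits = beat_frames(total_frames=200, fps=60, song_start=2.0, bpm=120)
```

Because nothing here reads a wall clock or the audio playback position, the beats land on the same frames whether a frame takes a sixtieth of a second or several seconds to render.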

Post-Processing

Pretty straightforward. I added in the song in post by using a video editor and that's it.

The Music

Almost forgot to say anything about the song. The music in this video was made by me. It came up when I was screwing around with synths in LMMS, and was later sequenced in a sequencer called OpenMPT. The instruments used in the song are all made using the Vital VST. It's a free plugin and open source too.

Conclusion

This write-up kind of became about the video rendering part rather than the actual video itself, didn't it? Heh. Well, coming to my actual thoughts on the animation itself, I'm glad that I was able to convert my thoughts into reality, even though it's not what I ideally wanted the animation to look like. I also planned for things like stars twinkling with the beat and particle effects going all around the table thing, but dropped the idea, seeing that my computer was struggling to render particle effects in real time and that sequencing more complex patterns than a simple beat could get extremely tedious. (You can expect a library for triggering and reading sequenced formats like MIDI or MOD in the near future :)). As for the song, it's okay-ish, but I am glad that I managed to make myself sit down and finish a complete song.

Nonetheless, I am happy to have finally put something out on the internet for people to see, and plan to use what I have learnt here in my future stuff. Maybe I'll try to do something similar in Blender but I don't think my laptop will be able to keep up with the rendering workload.

Well, that's pretty much it. Thanks for reading!